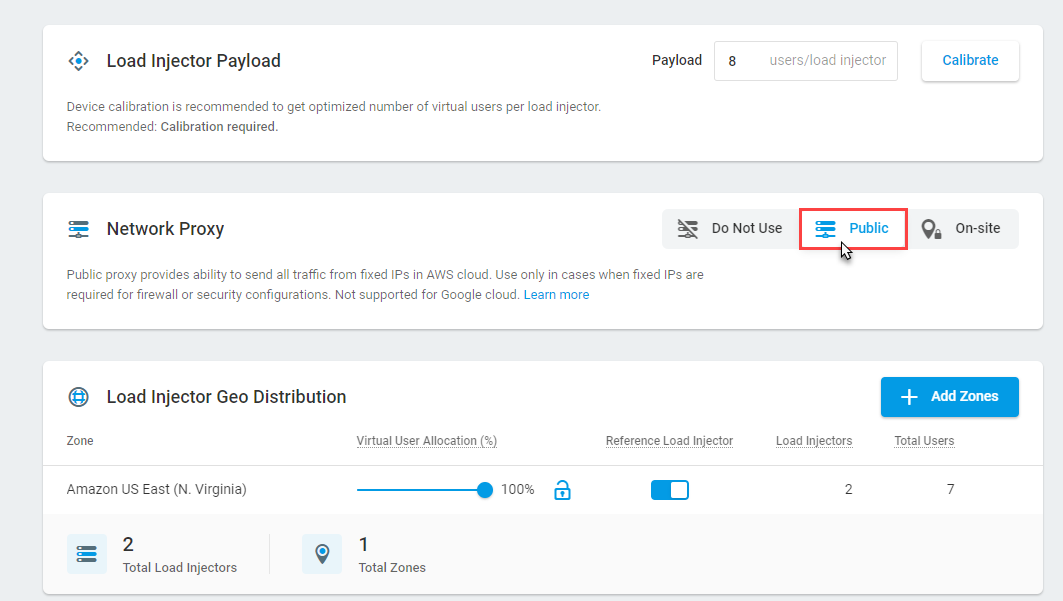

You must have a pool of at least 10 IPs before you start making HTTP requests, so that every request can go out from a different IP. If you keep using the same IP for every request, you will be blocked: this is the easiest way for anti-scraping mechanisms to catch you red-handed.
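Here is a minimal sketch of IP rotation in Python, using only the standard library. The `ProxyRotator` class, its `min_pool_size` parameter, the `fetch_all` helper and the proxy addresses (taken from the 203.0.113.0/24 documentation range) are hypothetical and not tied to any particular proxy provider. Round-robin rotation guarantees that consecutive requests use different IPs. With a pool of 10, each IP is reused only once every 10 requests.

```python
import itertools
import urllib.request
from typing import Dict, Iterable, Iterator


class ProxyRotator:
    """Hand out proxies round-robin so consecutive requests use different IPs.

    Hypothetical helper for illustration; not tied to any proxy provider.
    """

    def __init__(self, proxies: Iterable[str], min_pool_size: int = 10) -> None:
        pool = list(dict.fromkeys(proxies))  # drop duplicates, keep order
        if len(pool) < min_pool_size:
            raise ValueError(
                f"need at least {min_pool_size} distinct proxies, got {len(pool)}"
            )
        self._cycle: Iterator[str] = itertools.cycle(pool)

    def next_proxy(self) -> str:
        """Return the next proxy URL in the rotation."""
        return next(self._cycle)

    def opener(self) -> urllib.request.OpenerDirector:
        """Build a urllib opener that sends HTTP and HTTPS through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


def fetch_all(urls: Iterable[str], rotator: ProxyRotator) -> Dict[str, bytes]:
    """Fetch each URL through the next proxy in the pool (real network I/O)."""
    pages: Dict[str, bytes] = {}
    for url in urls:
        with rotator.opener().open(url, timeout=10) as resp:
            pages[url] = resp.read()
    return pages


# Hypothetical proxy addresses from the 203.0.113.0/24 documentation range.
PROXIES = [f"http://203.0.113.{i}:8080" for i in range(1, 11)]
```

Round-robin is the simplest policy. Common refinements are picking a random proxy for each request or dropping proxies that start returning errors.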

If you keep these points in mind while scraping a website, I am pretty sure you will be able to scrape most websites on the web.

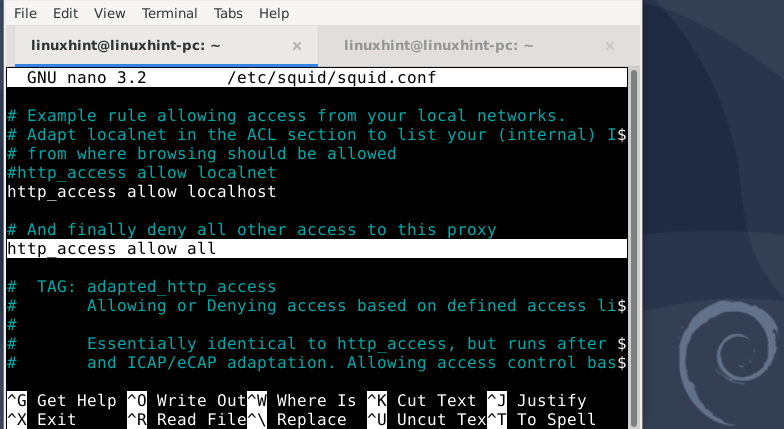

Many websites on the web do not have any anti-scraping mechanism, but some websites do block scrapers because they do not believe in open data access. One thing you have to keep in mind is: BE NICE and FOLLOW THE SCRAPING POLICIES of the website.

But if you are building web scrapers for your project or for a company, then you must follow these 10 tips before you even start scraping any website.

ROBOTS.TXT

First of all, you have to understand what a robots.txt file is and what it does. Basically, it tells search engine crawlers which pages or files the crawler can or can't request from your site. It is used mainly to avoid overloading a website with requests, and it provides standard rules about scraping. You can find the robots.txt file at the root of a website (for example, https://example.com/robots.txt). Many websites allow Google's crawler to scrape them. Sometimes, however, a website has `User-agent: *` followed by `Disallow: /` in its robots.txt file, which means it does not want any crawler to scrape any part of the site.

Basically, an anti-scraping mechanism works on one fundamental question: is this a bot or a human? To answer it, the mechanism checks certain criteria before making a decision. If any of the following apply to you, you are likely to be put in the category called “bots”:

- You scrape pages faster than a human possibly could.
- You follow the same pattern while scraping, for example going through every page of the target domain just to collect images or links.
- You scrape from the same IP for a certain period of time.
- You use a headless browser, or an anonymizing browser such as Tor Browser.
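Two of these habits, respecting robots.txt and not firing requests at a fixed, faster-than-human rate, can be sketched with Python's standard library. `urllib.robotparser.RobotFileParser` is the real stdlib parser. The helper names (`allowed_by_robots`, `jittered_delay`, `polite_pause`) and the default delay values (a 2 s base plus up to 1.5 s of random jitter) are illustrative assumptions, not thresholds that any site documents.

```python
import random
import time
import urllib.robotparser
from typing import Iterable, Optional


def allowed_by_robots(robots_lines: Iterable[str], user_agent: str, url: str) -> bool:
    """Return True if these robots.txt rules let `user_agent` fetch `url`."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


def jittered_delay(base: float = 2.0, jitter: float = 1.5,
                   rng: Optional[random.Random] = None) -> float:
    """Return a pause of base..base+jitter seconds, so requests avoid a fixed rhythm."""
    if rng is None:
        rng = random.Random()
    return base + rng.uniform(0.0, jitter)


def polite_pause(base: float = 2.0, jitter: float = 1.5) -> None:
    """Sleep for a randomized, human-scale interval between requests."""
    time.sleep(jittered_delay(base, jitter))


# The "block everything" rules: every crawler, every path.
BLOCK_ALL = ["User-agent: *", "Disallow: /"]
# Only one directory is off limits.
BLOCK_PRIVATE = ["User-agent: *", "Disallow: /private/"]
```

In a real crawler you would load the live file with `RobotFileParser.set_url()` and `read()` instead of passing lines in, check each URL before fetching it, and call `polite_pause()` between requests.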

Data scraping is something that has to be done responsibly. You have to be very careful with the website you are scraping, because aggressive scraping can have negative effects on it. There are FREE web scrapers on the market that can scrape many websites smoothly without getting blocked.
